A Sequence of Continuous Functions is Not Necessarily Continuous

6.2: Sequences and Continuity

- Further explanation of sequences and continuity

There is an alternative way to prove that the function

\[D(x) = \begin{cases} x & \text{ if } x \text{ is rational} \\ 0 & \text{ if } x \text{ is irrational} \end{cases}\]

is not continuous at \(a \neq 0\). We will examine this by looking at the relationship between our definitions of convergence and continuity. The two ideas are actually quite closely connected, as illustrated by the following very useful theorem.

Theorem \(\PageIndex{1}\)

The function \(f\) is continuous at \(a\) if and only if \(f\) satisfies the following property:

\[\forall \textit{ sequences} (x_n), \textit{ if } \lim_{n \to \infty }x_n = a \textit{ then } \lim_{n \to \infty }f(x_n) = f(a)\]

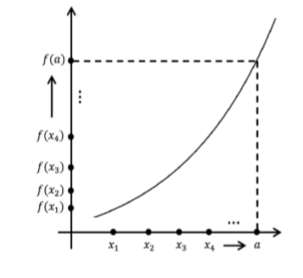

Theorem \(\PageIndex{1}\) says that in order for \(f\) to be continuous, it is necessary and sufficient that any sequence (\(x_n\)) converging to a must force the sequence (\(f(x_n)\)) to converge to \(f(a)\). A picture of this situation is below though, as always, the formal proof will not rely on the diagram.

Figure \(\PageIndex{1}\): A picture for the situation described in Theorem \(\PageIndex{1}\).

This theorem is especially useful for showing that a function \(f\) is not continuous at a point \(a\): all we need to do is exhibit a sequence (\(x_n\)) converging to \(a\) such that the sequence (\(f(x_n)\)) does not converge to \(f(a)\). Let's demonstrate this idea before we tackle the proof of Theorem \(\PageIndex{1}\).

Use Theorem \(\PageIndex{1}\) to prove that

\[f(x) = \begin{cases} \frac{\left | x \right |}{x} & \text{ if } x\neq 0 \\ 0 & \text{ if } x= 0 \end{cases} \nonumber\]

is not continuous at \(0\).

Proof:

First notice that \(f\) can be written as

\[f(x) = \begin{cases} 1 & \text{ if } x > 0 \\ -1 & \text{ if } x < 0 \\ 0 & \text{ if } x= 0 \end{cases} \nonumber\]

To show that \(f\) is not continuous at \(0\), all we need to do is create a single sequence (\(x_n\)) which converges to \(0\), but for which the sequence (\(f(x_n)\)) does not converge to \(f(0) = 0\). For a function like this one, just about any sequence will do, but let's use \(\left (\frac{1}{n} \right )\), just because it is an old familiar friend.

We have

\[\lim_{n \to \infty }\frac{1}{n} = 0 \nonumber\]

but, since \(\frac{1}{n} > 0\) for every \(n\),

\[\lim_{n \to \infty }f \left (\frac{1}{n} \right ) = \lim_{n \to \infty }1 = 1 \neq 0 =f(0). \nonumber\]

Thus by Theorem \(\PageIndex{1}\), \(f\) is not continuous at \(0\).
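A finite computation cannot prove a limit, but it can make the example concrete. The Python sketch below mirrors the function \(f\) from the text (the name `f` and the cutoff `N` are illustrative choices, not part of the original). It evaluates \(f\) along \(x_n = \frac{1}{n}\) and, for contrast, along \(x_n = -\frac{1}{n}\).

```python
def f(x: float) -> float:
    """|x|/x for x != 0, and 0 at x = 0 (the function in the example)."""
    if x == 0:
        return 0.0
    return abs(x) / x

N = 10_000
# Two sequences converging to 0, one from each side.
right = [f(1 / n) for n in range(1, N + 1)]   # terms f(1/n)
left = [f(-1 / n) for n in range(1, N + 1)]   # terms f(-1/n)

print(set(right), set(left), f(0.0))  # {1.0} {-1.0} 0.0
```

Every term of (\(f(1/n)\)) equals \(1\) and every term of (\(f(-1/n)\)) equals \(-1\), so neither sequence can approach \(f(0) = 0\).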

Use Theorem \(\PageIndex{1}\) to show that

\[f(x) = \begin{cases} \frac{\left | x \right |}{x} & \text{ if } x\neq 0 \\ a & \text{ if } x= 0 \end{cases} \nonumber\]

is not continuous at \(0\), no matter what value \(a\) is.

Use Theorem \(\PageIndex{1}\) to show that

\[D(x) = \begin{cases} x & \text{ if } x \text{ is rational} \\ 0 & \text{ if } x \text{ is irrational} \end{cases} \nonumber\]

is not continuous at \(a \neq 0\).

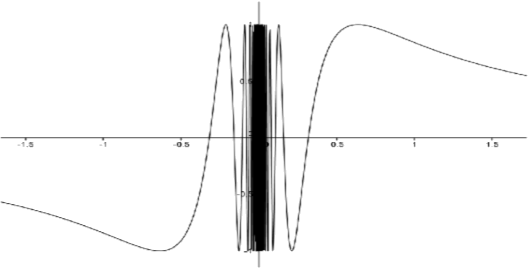

The function \(T(x) = \sin \left ( \frac{1}{x} \right )\) is often called the topologist's sine curve. Whereas \(\sin x\) has roots at \(nπ\), \(n ∈ \mathbb{Z}\) and oscillates infinitely often as \(x \to \pm \infty\), \(T\) has roots at \(\frac{1}{n\pi }\), \(n ∈ \mathbb{Z}\), \(n \neq 0\), and oscillates infinitely often as \(x\) approaches zero. A rendition of the graph follows.

Figure \(\PageIndex{2}\): Graph of \(T(x)\) as defined above.
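The roots listed above can be located numerically. The Python sketch below evaluates \(T\) at \(\frac{1}{n\pi}\) for \(n = 1, \ldots, 1000\). The helper name `T` and the cutoff of 1000 roots are illustrative choices, not taken from the text. Because \(\pi\) is only approximated in floating point, the computed values are tiny rather than exactly zero.

```python
import math

def T(x: float) -> float:
    """The topologist's sine curve sin(1/x); only meaningful for x != 0."""
    return math.sin(1 / x)

# The roots 1/(n*pi) for n = 1, ..., 1000 (positive n only, for brevity).
roots = [1 / (n * math.pi) for n in range(1, 1001)]

print(roots[0], roots[-1])              # the roots march toward 0
print(max(abs(T(r)) for r in roots))    # near 0; sin(n*pi) is not exactly 0 in floating point
```

The roots get arbitrarily close to \(0\), and that is where the infinitely many oscillations of \(T\) pile up.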

Notice that \(T\) is not even defined at \(x = 0\). We can extend \(T\) to be defined at \(0\) by simply choosing a value for \(T(0)\):

\[T(x) = \begin{cases} \sin \left ( \frac{1}{x} \right ) & \text{ if } x \neq 0 \\ b & \text{ if } x= 0 \end{cases} \nonumber\]

Use Theorem \(\PageIndex{1}\) to show that \(T\) is not continuous at \(0\), no matter what value is chosen for \(b\).

Sketch of Proof:

We've seen how to use Theorem \(\PageIndex{1}\); now we need to prove it. The forward direction is fairly straightforward. Assume that \(f\) is continuous at \(a\) and start with a sequence (\(x_n\)) which converges to \(a\). What is left to show is that \(\lim_{n \to \infty }f(x_n) = f(a)\). If you write down the definitions of \(f\) being continuous at \(a\), of \(\lim_{n \to \infty }x_n = a\), and of \(\lim_{n \to \infty }f(x_n) = f(a)\), you should be able to get from what you are assuming to what you want to conclude.

To prove the converse, it is convenient to prove its contrapositive. That is, we want to prove that if \(f\) is not continuous at \(a\), then we can construct a sequence (\(x_n\)) that converges to \(a\) but for which (\(f(x_n)\)) does not converge to \(f(a)\). First we need to recognize what it means for \(f\) to not be continuous at \(a\). It says that there exists an \(ε > 0\) for which no choice of \(δ > 0\) works. That is, for any \(δ > 0\), there will exist \(x\) such that \(|x-a| < δ\) but \(|f(x)-f(a)|≥ ε\). With this in mind, if \(δ = 1\), then there will exist an \(x_1\) such that \(|x_1 - a| < 1\), but \(|f(x_1) - f(a)| ≥ ε\). Similarly, if \(δ = \frac{1}{2}\), then there will exist an \(x_2\) such that \(|x_2 - a| < \frac{1}{2}\), but \(|f(x_2) - f(a)| ≥ ε\). If we continue in this fashion, we will create a sequence (\(x_n\)) such that \(|x_n - a| < \frac{1}{n}\), but \(|f(x_n) - f(a)|≥ ε\). This should do the trick.
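Written with quantifiers, the negation of continuity used in the previous paragraph reads:

\[\exists \varepsilon > 0 \ \ \forall \delta > 0 \ \ \exists x : \ |x - a| < \delta \ \text{ and } \ |f(x) - f(a)| \geq \varepsilon\]

Taking \(\delta = \frac{1}{n}\) for \(n = 1, 2, 3, \ldots\) produces points \(x_n\) with

\[|x_n - a| < \frac{1}{n} \quad \text{and} \quad |f(x_n) - f(a)| \geq \varepsilon \quad \text{for every } n.\]

The first inequality forces \(x_n \to a\). The second keeps every term of (\(f(x_n)\)) at distance at least \(\varepsilon\) from \(f(a)\).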

Turn the ideas of the previous two paragraphs into a formal proof of Theorem \(\PageIndex{1}\).

Theorem \(\PageIndex{1}\) is a very useful result. It is a bridge between the ideas of convergence and continuity, so it allows us to bring all of the theory we developed in Chapter 4 to bear on continuity questions. For example, consider the following.

Theorem \(\PageIndex{2}\)

Suppose \(f\) and \(g\) are both continuous at \(a\). Then \(f + g\) and \(f \cdot g\) are continuous at \(a\).

We could use the definition of continuity to prove Theorem \(\PageIndex{2}\), but Theorem \(\PageIndex{1}\) makes our job much easier. For example, to show that \(f + g\) is continuous at \(a\), consider any sequence (\(x_n\)) which converges to \(a\). Since \(f\) is continuous at \(a\), Theorem \(\PageIndex{1}\) gives \(\lim_{n \to \infty }f(x_n) = f(a)\). Likewise, since \(g\) is continuous at \(a\), \(\lim_{n \to \infty }g(x_n) = g(a)\). By Theorem 4.2.1 of Chapter 4, \(\lim_{n \to \infty }(f + g)(x_n) = \lim_{n \to \infty }\left (f(x_n) + g(x_n) \right ) = \lim_{n \to \infty }f(x_n) + \lim_{n \to \infty }g(x_n) = f(a) + g(a) = (f+g)(a)\). Thus by Theorem \(\PageIndex{1}\), \(f + g\) is continuous at \(a\). The proof that \(f \cdot g\) is continuous at \(a\) is similar.

Use Theorem \(\PageIndex{1}\) to show that if \(f\) and \(g\) are continuous at \(a\), then \(f \cdot g\) is continuous at \(a\).

By employing Theorem \(\PageIndex{2}\) a finite number of times, we can see that a finite sum of continuous functions is continuous. That is, if \(f_1, f_2, ..., f_n\) are all continuous at \(a\) then \(\sum_{j=1}^{n} f_j\) is continuous at \(a\). But what about an infinite sum? Specifically, suppose \(f_1, f_2, f_3,...\) are all continuous at \(a\). Consider the following argument.

Let \(ε > 0\). Since \(f_j\) is continuous at \(a\), there exists \(δ_j > 0\) such that if \(|x - a| < δ_j\), then \(|f_j(x) - f_j(a)| < \frac{ε}{2^j}\). Let \(δ = \min (δ_1, δ_2, ...)\). If \(|x - a| < δ\), then

\[\left | \sum_{j=1}^{\infty } f_j(x) - \sum_{j=1}^{\infty } f_j(a) \right | = \left | \sum_{j=1}^{\infty } \left (f_j(x) - f_j(a) \right ) \right | \leq \sum_{j=1}^{\infty }\left | f_j(x) - f_j(a) \right | < \sum_{j=1}^{\infty } \frac{\varepsilon }{2^j} = \varepsilon\]

Thus by definition, \(\sum_{j=1}^{\infty } f_j\) is continuous at \(a\).

This argument seems to say that an infinite sum of continuous functions must be continuous (provided it converges). However, we know that the Fourier series

\[\frac{4}{\pi }\sum_{k=0}^{\infty } \frac{(-1)^k}{(2k+1)} \cos \left ( (2k+1)\pi x \right )\]

is a counterexample to this, as it is an infinite sum of continuous functions which does not converge to a continuous function. Something fundamental seems to have gone wrong here. Can you tell what it is?

This is a question we will spend considerable time addressing in Chapter 8, so if you don't see the difficulty, don't worry; you will. In the meantime, keep this problem tucked away in your consciousness. It is, as we said, fundamental.
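The claim that this Fourier series converges to a discontinuous function can be checked numerically. The Python sketch below evaluates partial sums at a few points. The helper `partial_sum` and the choice of 20,000 terms are illustrative, not from the text. The sums settle near \(1\) for \(|x| < \frac{1}{2}\), near \(-1\) for \(\frac{1}{2} < x \leq 1\), and at \(0\) when \(x = \frac{1}{2}\). So the limit jumps at \(x = \frac{1}{2}\), even though every term is continuous.

```python
import math

def partial_sum(x: float, n_terms: int) -> float:
    """First n_terms terms of (4/pi) * sum_{k>=0} (-1)^k/(2k+1) * cos((2k+1)*pi*x)."""
    total = 0.0
    for k in range(n_terms):
        m = 2 * k + 1                      # the odd frequency 2k+1
        total += (-1) ** k / m * math.cos(m * math.pi * x)
    return 4 / math.pi * total

# Every term is a continuous function of x, yet the partial sums settle
# near 1 on one side of x = 1/2 and near -1 on the other.
for x in (0.0, 0.4, 0.5, 0.6, 1.0):
    print(x, partial_sum(x, 20_000))
```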

Theorem \(\PageIndex{1}\) will also handle quotients of continuous functions. There is, however, a small detail that needs to be addressed first. Obviously, when we consider the continuity of \(f/g\) at \(a\), we need to assume that \(g(a) \neq 0\). However, \(g\) may be zero at other values. When we choose our sequence (\(x_n\)) converging to \(a\), how do we know that \(g(x_n)\) is not zero? A zero there would spoil our plan of using the corresponding theorem for sequences (Theorem 4.2.3 from Chapter 4). This can be handled with the following lemma.

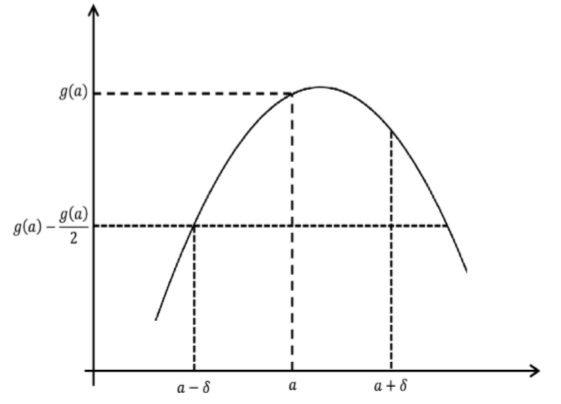

If \(g\) is continuous at \(a\) and \(g(a) \neq 0\), then there exists \(δ > 0\) such that \(g(x) \neq 0\) for all \(x ∈ (a - δ,a + δ)\).

Prove Lemma \(\PageIndex{1}\).

- Hint

-

Consider the case where \(g(a) > 0\). Use the definition with \(ε = \frac{g(a)}{2}\). The picture is below; make it formal.

Figure \(\PageIndex{3}\): Picture for Lemma \(\PageIndex{1}\).

For the case \(g(a) < 0\), consider the function \(-g\).

A consequence of this lemma is that if we start with a sequence (\(x_n\)) converging to \(a\), then for \(n\) sufficiently large, \(g(x_n) \neq 0\).
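In symbols: let \(δ\) be the number supplied by Lemma \(\PageIndex{1}\). The definition of convergence, applied with \(δ\) in the role of \(ε\), gives an \(N\) such that

\[n \geq N \implies |x_n - a| < \delta \implies g(x_n) \neq 0.\]

So the quotients \(\frac{f(x_n)}{g(x_n)}\) are defined for all \(n \geq N\). Discarding the finitely many earlier terms does not affect whether, or to what, the sequence converges.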

Use Theorem \(\PageIndex{1}\) to prove that if \(f\) and \(g\) are continuous at \(a\) and \(g(a) \neq 0\), then \(f/g\) is continuous at \(a\).

Theorem \(\PageIndex{3}\)

Suppose \(f\) is continuous at \(a\) and \(g\) is continuous at \(f(a)\). Then \(g \circ f\) is continuous at \(a\). [Note that \((g \circ f)(x) = g(f(x))\).]

Prove Theorem \(\PageIndex{3}\).

- Using the definition of continuity.

- Using Theorem \(\PageIndex{1}\).

The above theorems allow us to build continuous functions from other continuous functions. For example, knowing that \(f(x) = x\) and \(g(x) = c\) are continuous, we can conclude that any polynomial,

\[p(x) = a_nx^n + a_{n-1}x^{n-1} +\cdots + a_1x + a_0\]

is continuous as well. We also know that functions such as \(f(x) = \sin (e^x)\) are continuous without having to rely on the definition.
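One way to see why finitely many applications of Theorem \(\PageIndex{2}\) suffice is to write the polynomial in nested (Horner) form. This form builds \(p\) from \(x\) and constants using only sums and products:

\[p(x) = a_0 + x\Bigl(a_1 + x\bigl(a_2 + \cdots + x(a_{n-1} + a_n x)\cdots\bigr)\Bigr)\]

For instance, \(3x^2 - 2x + 5 = 5 + x(-2 + 3x)\). This is a constant plus the product of \(x\) with a constant plus a product of a constant and \(x\).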

Show that each of the following is a continuous function at every point in its domain.

- Any polynomial.

- Any rational function. (A rational function is defined to be a ratio of polynomials.)

- \(\cos x\)

- The other trig functions: \(\tan (x), \cot (x), \sec (x), \csc (x)\)

What allows us to conclude that \(f(x) = \sin(e^x)\) is continuous at any point \(a\) without referring back to the definition of continuity?

Theorem \(\PageIndex{1}\) can also be used to study the convergence of sequences. For example, since \(f(x) = e^x\) is continuous at every point and \(\lim_{n \to \infty }\frac{n+1}{n} =1\), we have \(\lim_{n \to \infty }e^{\left (\frac{n+1}{n} \right )} = e\). This also illustrates a certain way of thinking about continuous functions: they are the ones where we can "commute" the function and a limit of a sequence. Specifically, if \(f\) is continuous at \(a\) and \(\lim_{n \to \infty }x_n = a\), then \(\lim_{n \to \infty }f(x_n) = f(a) = f(\lim_{n \to \infty }x_n)\).
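The limit \(\lim_{n \to \infty} e^{\left(\frac{n+1}{n}\right)} = e\) can be sanity-checked numerically. The Python sketch below computes \(\left|e^{(n+1)/n} - e\right|\) for growing \(n\). The helper `exp_error` and the sample values of \(n\) are illustrative, not from the text. The shrinking errors are consistent with moving the limit inside the continuous function \(e^x\), though they do not prove it.

```python
import math

def exp_error(n: int) -> float:
    """Distance between exp((n+1)/n) and e = exp(1)."""
    x_n = (n + 1) / n          # x_n -> 1 as n -> infinity
    return abs(math.exp(x_n) - math.e)

ns = [1, 10, 100, 1_000, 10_000]
errors = [exp_error(n) for n in ns]
for n, err in zip(ns, errors):
    print(n, err)   # for large n the error is roughly e/n
```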

Compute the following limits. Be sure to point out how continuity is involved.

- \(\lim_{n \to \infty }\sin \left ( \frac{n\pi }{2n+1} \right )\)

- \(\lim_{n \to \infty }\sqrt{\frac{n}{n^2+1}}\)

- \(\lim_{n \to \infty }e^{\left (\sin (\frac{1}{n}) \right )}\)

Having this rigorous formulation of continuity is necessary for proving the Extreme Value Theorem and the Mean Value Theorem. However, there is one more piece of the puzzle to address before we can prove these theorems.

We will do this in the next chapter, but before we go on it is time to define a fundamental concept that was probably one of the first you learned in calculus: limits.

Source: https://math.libretexts.org/Bookshelves/Analysis/Book:_Real_Analysis_%28Boman_and_Rogers%29/06:_Continuity_-_What_It_Isn%E2%80%99t_and_What_It_Is/6.02:_Sequences_and_Continuity
